For various reasons, i have two mp3 players. I've had both units for more than a year. In fact, both units are no longer sold as new. Even though they both have excellent sound, I really only use one of them. Try to guess which one it is after the descriptions below.

One is the original Apple iPod Shuffle. It looks like a white pack of gum with a rounded rectangle look. It has a button in a ring. The button is the play/stop button. The ring has forward a track and (hold it down) fast forward, back a track and (hold it down) rewind, volume up and volume down. There's a blinking light indicating it's on, etc. I mostly ignore it. On the back, it has a three position switch which gives you power off, sequential play and shuffle mode. There's a button which lets you check the state of the internal battery. The light shows green, amber or red.

The unit has a USB connection. You mount it on some computer, add tracks, and delete tracks. You need software which knows how to update the database on the device, otherwise the device won't play the new tracks. I use gnupod, since other software for the iPod doesn't really work for Linux. This software works on the command line and has awful syntax, but this can be fixed with aliases and such in your shell's startup file. The key is that it works. I could say that again, but you'd get bored. You can store things on it that aren't music, for example to copy things from one place to another. Inside, there is half a GB of flash. That's enough for 12 to 20 hours of content, depending on encoding, quality, etc. While you're connected, the battery charges. I don't think about the battery unless i'm going on a long trip. It usually works. But for long trips, the limit is 18 hours of use on a full charge. I haven't tested this lately.
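As a rough check on that 12 to 20 hour figure, here is a back-of-envelope sketch. It assumes constant bitrate encoding, a binary half GB, and no filesystem or database overhead. The function name and the bitrates are just my picks for illustration, nothing from gnupod or the iPod itself.

```python
def hours_of_audio(capacity_bytes: int, bitrate_kbps: float) -> float:
    """Hours of constant-bitrate audio that fit in capacity_bytes."""
    total_bits = capacity_bytes * 8
    seconds = total_bits / (bitrate_kbps * 1000)  # kbps = 1000 bits per second
    return seconds / 3600


# Assumption: "half a GB" means 512 * 2**20 bytes, all of it usable.
HALF_GB = 512 * 1024 * 1024

if __name__ == "__main__":
    # Hypothetical bitrates: 64 kbps is typical for spoken word,
    # 96 and 128 kbps for music.
    for kbps in (64, 96, 128):
        print(f"{kbps} kbps: {hours_of_audio(HALF_GB, kbps):.1f} hours")
```

Under those assumptions, 96 kbps lands a bit over 12 hours and 64 kbps a bit under 19, which brackets the range quoted above reasonably well.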

If you pause the unit, it will eventually turn itself off. When you turn it on, it sometimes remembers where you were in a track, and sometimes it skips to the beginning of the track. Sometimes it forgets the track, and skips to the "first track". This is the same as the first track your computer reports is on the device. Sometimes, the software records that you deleted a track, but the track isn't actually deleted. It takes up space, but isn't accessible. It can be hard to figure out which track it is, or even notice that it has happened. The tracks are always renamed with a "1_" prefix added. Sometimes, parts of the file names are removed. It might have something to do with the FAT32 filesystem, and name length limitations or name mangling. Hey, blame it on Micros~1.

The other unit was on sale at Radio Shack, and is called My Musix. It is black, rounded everywhere, slightly fatter than the Shuffle, but otherwise comparable in size. On the front is a fairly large dot matrix display, which tells you what you're doing. There is a big play/stop button. There is a forward track and reverse track rocker, and if you hold either side, you get fast forward/reverse. There is a volume up and volume down rocker. There is a record button. There is a device lock slider switch. Lock it, and the other controls will not respond. Handy for preventing error.

Turn the unit on by pressing the Play button. Turn it off by pressing (and holding) the Play button. Play by pressing Play, right? Well, that depends on what mode you're in. Mostly, i press the Record button first, which puts it into a mode menu. One mode lets you change folders, each of which might have different content, like a book in one, and space music in another, and podcasts in a third. Have as many as you like. Another is Play mode, where you can opt for sequential or random play. Another mode is Delete. You can delete tracks from the interface. Another mode is Repeat - including none, one track, one folder. Another mode is FM Radio. You can tune in FM stations. You can even record FM. Play the FM later, or download it to your main computer. Another mode is Record. You can record ambient audio. The sound is recorded with 8 bit samples at 8 kHz. Not very high quality. There are two levels of menu. The settings menu has a mode for setting the screen backlight. You can use a red backlight - which is good for astronomy, as it doesn't ruin your night vision.

The unit has a USB connection. You mount it on some computer, add tracks, and delete tracks. No special software. When the device turns on, it figures out what it has, and lets you play it. You can store things on it that aren't music, for example to copy things from one place to another. It ignores things it doesn't understand. Inside, there is one GB of flash. That's enough for 24 to 60 hours of content. That's enough that you can stuff content in a folder for reference. It uses a AAA battery. I use rechargeable batteries. It gets about 8 hours on a charge, but you can bring more batteries with you, so you get infinite duration with minimal preplanning. If you don't preplan, there's always 7/11. And if your rechargeable battery dies a final death, you can always pick up more at the grocery store. The design of the battery cover door suggests that you'll misplace it sometime. So far, i've been careful, and though i've lost it twice, i've also quickly found it again. It's a nuisance when you have to pull off the road to fish under the seat. Probably should have pulled over to change the battery. Think safety first.

If you pause the unit, it will eventually turn itself off. When you turn it on, it sometimes remembers which track you were on, but always skips to the beginning of the track. Sometimes it forgets the track, folder and everything. You usually get to the first track in a random folder. Well, it's random to me. It even forgets the track when you actually turn it off gracefully, sometimes. It sometimes suffers from the Micros~1 problem. However, it doesn't, in general, rename files. Though long folder names sometimes get renamed to eight characters. This summer, i loaded Lord of the Rings audio book tracks on it, and the unit was bricked. I called customer support, and got software that let me reflash it. The new software fixed the "unusual characters in the filenames can brick the unit" problem, and cleaned up some display bugs too. I now have the software, and can use it any time to unbrick the unit. Since the unit behaves so much better, i'm actually glad i had to reflash it. In fact, if they had a registration system, and could, for example, send you email when new operating software was available, it would be worth signing up for spam. In any case, the customer support rocked. It's hard to remember when i've dealt with good customer support before.

Note that both units have their faults. The Apple Shuffle needed to keep the form factor, fix rewind so that it can back into the end of the previous track, fix it so that it remembers where it is in a track when it is turned off, and remove the "off" position, letting the switch toggle between sequential and shuffle mode only. The form factor is fine - it didn't need to be made smaller. I'd offer it in multiple colors, not just white. Of course, then i'd buy the unpopular polka dot version at discount.

The My Musix needs to have a single menu, not two nested menus. It needs to remember where it was when turned off. If it's going to record, it needs to be high quality 16 bit sound with at least 22 kHz samples, preferably CD rate samples. Mono is OK. The battery cover design needs fixing. The display shows the current track. But if the track title doesn't fit in the display, it scrolls. It starts scrolling instantly, which doesn't give you time to read the first few characters. A pause of a couple of seconds before scrolling would fix this. It should be offered in multiple colors, not just black. Of course, then i'd buy the unpopular polka dot version at discount.
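To put numbers on the recording wish, here is a small sketch of raw (uncompressed) mono PCM data rates. I don't actually know whether the My Musix stores its recordings uncompressed, so treat that as an assumption. The function name is made up for illustration, and i've taken "22 kHz" to mean 22,050 Hz and "CD rate" to mean 44,100 Hz.

```python
def pcm_bytes_per_second(sample_rate_hz: int, bits_per_sample: int,
                         channels: int = 1) -> int:
    """Raw PCM data rate in bytes per second (no compression, no headers)."""
    return sample_rate_hz * bits_per_sample * channels // 8


# What the unit records now: 8 bit samples at 8 kHz, mono.
current = pcm_bytes_per_second(8_000, 8)

# What i'd like: 16 bit samples at 22.05 kHz (assumed value), mono.
wanted = pcm_bytes_per_second(22_050, 16)

# CD rate samples, still mono.
cd_mono = pcm_bytes_per_second(44_100, 16)

if __name__ == "__main__":
    for label, rate in (("current", current), ("wanted", wanted),
                        ("CD mono", cd_mono)):
        print(f"{label}: {rate} bytes/s")
```

So the wish list costs roughly five and a half times the storage per second of the current recording mode, and CD rate mono about eleven times. With a GB of flash, that still looks affordable.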

OK, so which one do i use? Simple interface, half storage? Or complicated interface, replaceable batteries? The answer is that i nearly never use the My Musix. But one day the battery in the Shuffle will die, and i'll switch to the My Musix, and use it for twenty years. And i'll likely pine for the good old days when life was simple.

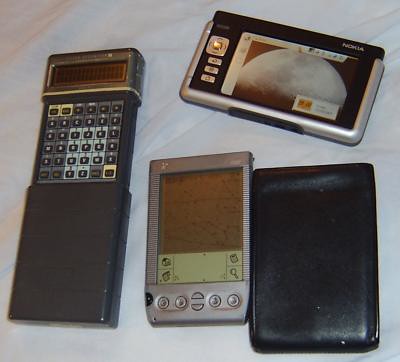
